I tried my best to pull together a list of useful links related to implementing security within WCF services as recommended by the published patterns and practices.

Transport Layer Security Patterns and Practices (a little older but concepts are still relevant)

http://msdn.microsoft.com/en-us/library/ff650659.aspx

WCF Security Fundamentals Patterns and Practices

http://msdn.microsoft.com/en-us/library/ff649233.aspx

• WCF Security Fundamentals

• Authentication, Authorization, and Identities in WCF

• Impersonation and Delegation in WCF

• Message and Transport Security in WCF

Securing Services and Clients

http://msdn.microsoft.com/en-us/library/ms734736(v=VS.100).aspx

Message Security in WCF

http://msdn.microsoft.com/en-us/library/ms733137(v=VS.100).aspx

Use Transport Security and Message Credentials

http://msdn.microsoft.com/en-us/library/ms789011.aspx

Secure a Service with an X.509 Certificate

http://msdn.microsoft.com/en-us/library/ms788968.aspx

All of the content included in these links belongs to the respective companies and/or individuals. I am only providing the links so that all of the information can be found in one place.

Software Architect - Review and validate software design and architecture. Provide technical guidance and direction for software development best practices.

System Architect - Review and recommend best practice system architecture for core enterprise systems.

Enterprise Architect - Evaluate and determine functional, physical, and logical perspectives of core enterprise systems.

Thursday, November 11, 2010

Tuesday, September 28, 2010

Microsoft SQL Server 2008 R2 Express with Management Studio - SQL Azure - Cloud Services

To my surprise, installing Microsoft SQL Server 2008 R2 Express with Management Studio allows integration with SQL Azure and Cloud Services. Management Studio shows:

- Full Database List

- Tables

- Stored Procedures

How nice that you do not have to run queries to get the table definitions.

Very Cool!!!!

Wednesday, August 18, 2010

Friday, August 6, 2010

VS2010 - New Features - Microsoft Enterprise Library 5.0 – April 2010

To save everyone some time I am simply re-listing the new features added to the Enterprise Library 5.0 release in April 2010 by Microsoft. The full article can be found here: http://msdn.microsoft.com/en-us/library/ff632023.aspx

New in the Enterprise Library 5.0

This major release of Enterprise Library contains many compelling new features and updates that will make developers more productive. There are no new blocks; instead the team focused on making the existing blocks shine, on testability, maintainability and learnability. The new features include:

- Major architectural refactoring that provides improved testability and maintainability through full support of the dependency injection style of development

- Dependency injection container independence (Unity ships with Enterprise Library, but you can replace Unity with a container of your choice)

- Programmatic configuration support, including a fluent configuration interface and an XSD schema to enable IntelliSense

- Redesign of the configuration tool to provide:

  - A more usable and intuitive look and feel

  - Extensibility improvements through meta-data driven configuration visualizations that replace the requirement to write design time code

  - A wizard framework that can help to simplify complex configuration tasks

- Data accessors for more intuitive processing of data query results

- Asynchronous data access support

- Honoring validation attributes between Validation Application Block attributes and DataAnnotations

- Integration with Windows Presentation Foundation (WPF) validation mechanisms

- Support for complex configuration scenarios, including additive merge from multiple configuration sources and hierarchical merge

- Optimized cache scavenging

- Better performance when logging

- Support for the .NET 4.0 Framework and integration with Microsoft Visual Studio 2010

- Improvements to Unity

- A reduction of the number of assemblies

Thursday, July 29, 2010

Visual Studio 2010 - MVC 3 and DLR - Great New Feature

MVC 3 and the use of the Dynamic Keyword and DLR Infrastructure

Having already blogged about the dynamic keyword and its power, I found it very interesting to see how MVC 3 leverages the DLR infrastructure that is now included with .NET 4.0.

What was very cool was to see some of the new features of MVC 3 leveraging it for property-style access to view data in the controller. Before, your code may have looked like this: ViewData["Mydata"] = "Sean's Data".

With MVC 3 you now have another object called ViewModel, used as ViewModel.YourField, where "YourField" is a dynamic property that is resolved at runtime. (The preview release names this object ViewModel; it was renamed ViewBag in later MVC 3 releases.) Very cool!!! The only downside is that you do not get IntelliSense, but given the flexibility you gain it is a very nice addition.
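To make the idea concrete, here is a minimal sketch of how a dynamic object can sit on top of a ViewData-style dictionary. DynamicBag and DynamicBagDemo are hypothetical names made up for this illustration; this is not the actual MVC 3 class, only the general DLR technique (System.Dynamic.DynamicObject) that makes this kind of property syntax possible.

```csharp
using System.Collections.Generic;
using System.Dynamic;

// Hypothetical stand-in for illustration only; not MVC's real implementation.
public sealed class DynamicBag : DynamicObject
{
    private readonly IDictionary<string, object> _data;

    public DynamicBag(IDictionary<string, object> data)
    {
        _data = data;
    }

    // Called by the DLR for reads such as bag.MyData.
    public override bool TryGetMember(GetMemberBinder binder, out object result)
    {
        // A missing key yields null instead of throwing.
        _data.TryGetValue(binder.Name, out result);
        return true;
    }

    // Called by the DLR for writes such as bag.Title = "Home".
    public override bool TrySetMember(SetMemberBinder binder, object value)
    {
        _data[binder.Name] = value;
        return true;
    }
}

public static class DynamicBagDemo
{
    public static string Run()
    {
        var viewData = new Dictionary<string, object>();
        viewData["MyData"] = "Sean's Data";   // the dictionary style

        dynamic bag = new DynamicBag(viewData);
        bag.Title = "Home";                    // the dynamic property style

        // Both styles read and write the same underlying dictionary.
        return (string)bag.MyData + " / " + (string)viewData["Title"];
    }
}
```

Because the property names are only looked up at runtime, a typo such as bag.Titel would compile fine and simply return null in this sketch, which is the IntelliSense trade-off mentioned above.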

DLR Sidebar

So what is the DLR? DLR stands for Dynamic Language Runtime, and it runs on top of the CLR (Common Language Runtime). A very nice read on the DLR: http://msdn.microsoft.com/en-us/magazine/ff796223.aspx

Also included is a link to my blog entry for the dynamic keyword. .NET 4.0 "dynamic" keyword versus, "var" keyword - anonymous types

Monday, July 26, 2010

Visual Studio 2010 - Windows Phone 7 Development - Quick and Easy

Well well well, Windows Phone 7. Hmmmmm. What to expect. Interestingly enough, a very big surprise. The ease of development for Windows Phone 7 is far better than I have seen in the past, especially with the setup and the emulators. Aside from the extremely long SDK installation, the integration of the SDK into Visual Studio 2010's IDE was worth the wait. The design time components are drag and drop and fully functional with Silverlight. Overall very nice!!!!

The design time IDE looks like this:

Much to my surprise, I was able to code a very simple lint brush application very quickly, and I plan to publish it on the Marketplace once the application is approved.

The end result from my perspective is that Windows Phone 7, from the SDK and Visual Studio 2010 integration standpoint, seems very promising.

Nice!!!!!!

Wednesday, July 21, 2010

Visual Studio 2010 - AutoMapper, MVC 2 Entity Framework, ASP.NET 4.0

Very nice articles on Auto Mapping one set of objects to another. An example would be if you have EF objects that you want to map differently for independent views within MVC.

http://www.codeproject.com/Articles/50387/ASP-NET-MVC-ViewModel-Value-Formatting-using-AutoM.aspx

http://automapper.codeplex.com/wikipage?title=Getting%20Started&referringTitle=Home

http://perseus.franklins.net/dnrtvplayer/player.aspx?ShowNum=0155

Tuesday, July 20, 2010

Nice Guide on How to Configure IIS for Silverlight

http://learn.iis.net/page.aspx/262/configuring-iis-for-silverlight-applications/

Friday, June 4, 2010

ADO.NET Entity Framework 4.0 - Does it work??? – Yes it does and below is an example in 6 easy steps.

The first question I had with the entity framework is if the framework actually worked. As many of us already use tools such as SubSonic, NHibernate, etc, we often question if the ADO.NET Entity Framework 4.0 will provide the same coding benefits as the other tools. To make the blog entry brief, the answer is yes. In fact, Microsoft .NET’s ADO.NET Entity Framework 4.0 exceeded my expectation in several areas where the other products either required some additional configuration or code to handle the same simple tasks.

6 Steps to using the Entity Framework based on an already existing database design

Step 1:

Create a Database (EntityTestDB). Then add a few tables such as Users, Roles and Profiles. Link the tables accordingly so that you build a relationship between the User, Role and Profile tables.

Step 2:

Create a New Web Project using Visual Studio 2010. (For this example, select the MVC 2 Template project). Once the project is created, Add a new folder called Business Layer with a Business Layer class in that folder.

Step 3:

After creating the base project and Web Solution you are now ready to use the ADO.NET Entity Framework. To do so, right click on the solution and click “Add New Item”. Click on the “Data Template” Item on the left and then select “ADO.NET Entity Data Model”. For this Step Name your Entity “EntityTest” and click “Add”.

At this point a wizard will be shown with a number of automated steps to assist you with creating the necessary items for your project.

- First will be to choose the model contents. For our example we are going to use the database we created in the first step so select “Generate from Database” and click “Next”.

- Next either create a new connection or connect to the location where you created your database in Step 1. Then proceed to the next step in the Wizard. (You can leave the default checkboxes for this step.)

- Next you are going to choose what objects you would like for your Model. For our example select them all (“Tables, Views, and Stored Procedures”) and click “Finish”. (You can leave the default checkboxes for this step.)

- When the Wizard finishes you will be presented with a Model View of your tables.

At this point there are several very valuable items to be aware of. First is the Table Mapping Details. (Just right-click on the Model Object and select “Table Mapping”.)

The second is the Mapping Details for your operations such as Insert, Update, and Delete. (Just click on the other icon on the left in the window showing the Mapping Details.)

Step 4:

Create a Select Function and map it to the stored procedure in the database from Step 1 that retrieves the User, Profile and Role data (this assumes one was created alongside the tables). A quick way to perform this step is to use the Entity Model Browser. To view the Entity Model Browser, click “View” on the menu bar, then “Other Windows”, then “Entity Model Browser”.

Note:

The entity browser will only show you your entity details if you have selected the entity model from Solution Explorer.

Next you will Navigate in the Entity Model Browser to the “Stored Procedures” section. Then expand that window and “Right Click” on the stored procedure to select the User, Role and Profile information. Then click on “Add Function Import”.

For our example we would like to return a list of Users so when the Wizard starts, make sure to select the User entity as the return type and click “OK”.

Step 5:

Now we are ready to use our Entities, Models and call our Stored procedure. Navigate to the Business Layer and create a method named “TestEntityFramework” in the class.

Note:

For this example it does not matter what the method returns; we are just showing the functionality. If you like, you can add a grid to the screen and bind the returned data to it.

Add the following code to the sample:

Step 6:

From your sample make a call to the “TestEntityFramework” method and view the results.

SUMMARY

In summary, Microsoft’s Entity Framework 4.0, the Entity Model Browser and the overall functionality seem to be on par with, if not better than, the other tools that have been used in the past. The advantages that I noticed were the ability to easily configure the Entity Framework for LINQ queries (LINQ to Entities), Stored Procedures, etc. In addition, the relational database mapping of the objects is awesome. There are many more reasons to use this over other tools, but this was more of a tutorial on how to use the ADO.NET Entity Framework 4.0 in Visual Studio 2010 than an evaluation of one tool-set over another.

Enjoy!!!!

Wednesday, June 2, 2010

Wednesday, May 12, 2010

.NET 4.0 "dynamic" keyword versus, "var" keyword - anonymous types

The "dynamic" keyword in .NET 4.0.

What is really cool about this keyword is that member access on a dynamic variable is resolved at run-time, so the compiler does not check whether the properties, methods, etc. actually exist. Very cool. Below is an explanation of this in a little more detail.

The "var" keyword

First you may think that this is just another way to implement the "var" keyword, but this is not true. The "var" keyword is very different than the "dynamic" keyword. The "var" keyword can only declare local variables; it cannot be used as the type of a method parameter or as a return type, whereas "dynamic" can.

Now one could argue that you can do this by boxing or ask why you would want to do this in the first place, but this is purely just to show the differences. In addition, the "var" keyword is statically typed, so when the compiler reaches this line of code it infers the type from the initializer and determines whether every expression that uses the variable is correct.

For example the following line of code will show a compile error as highlighted in red:

Because the compiler statically types this anonymous type, it determines that the method does not exist and raises an error when trying to compile. Now you can get around this by using reflection or an object, but this example is just to show the differences, not how to make another approach work.
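As a minimal sketch of this behavior (VarDemo, the property names, and DoesNotExist are made up for illustration), the snippet below declares an anonymous type with "var". The commented-out line is the kind of call the compiler rejects with error CS1061, because the inferred type has no such method.

```csharp
public static class VarDemo
{
    public static string Describe()
    {
        // The compiler infers an anonymous type with Name and Age properties.
        var person = new { Name = "Sean", Age = 30 };

        // Uncommenting the next line stops the build with error CS1061,
        // because the inferred type has no method called DoesNotExist:
        // person.DoesNotExist();

        // Members that do exist are checked and resolved at compile time.
        return person.Name + " is " + person.Age;
    }
}
```

Calling VarDemo.Describe() returns "Sean is 30"; the point is that every member used on person is validated before the program ever runs.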

Now let's take a look at the "dynamic" keyword.

For this example we will take the same example as above and see if this compiles using a dynamic anonymous type. Furthermore, we will even call a method that we know does not exist in the class.

As you can see with this example, the compiler does not check whether the properties or methods of this anonymous type exist. Instead, each member access is bound at run-time, so a call to a missing method only fails when that line actually executes.
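A minimal sketch of the same idea with "dynamic" (again, DynamicDemo and DoesNotExist are hypothetical names chosen for illustration): the code compiles even though the method does not exist, and the failure surfaces at run-time as a RuntimeBinderException.

```csharp
using Microsoft.CSharp.RuntimeBinder;

public static class DynamicDemo
{
    public static string CallMissingMethod()
    {
        // Same anonymous type as before, but held in a dynamic variable.
        dynamic person = new { Name = "Sean" };

        try
        {
            // Compiles without complaint; the lookup happens at run-time.
            person.DoesNotExist();
            return "no error";
        }
        catch (RuntimeBinderException ex)
        {
            // The runtime binder reports that the member could not be found.
            return "runtime error: " + ex.Message;
        }
    }
}
```

Catching the exception here is only to make the behavior visible; in real code you would normally want to avoid relying on members you cannot be sure exist.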

Summary

In summary, the "var" keyword is resolved at compile time to the static type of its initializer (in these examples an anonymous type, which derives directly from the base object class). The "dynamic" type, on the other hand, is handled at run-time, allowing for more flexibility at the cost of IntelliSense and compile-time checking.

With all that said, this could be looked at as very dangerous coding. However, when using objects that are dynamic this could be very handy if used properly. I could think of a few areas to use this, such as with Web Services where you do not have the WSDL yet, but would like to complete the coding, or legacy COM objects where the properties are dynamic themselves.

I plan to perform some benchmark tests to see if using this has performance impacts and will update my blog when finished.

All comments are welcome.

Tuesday, May 11, 2010

TFS 2010 server licensing: It's included in MSDN subscriptions

Very good news for the development community. It appears as though Team Foundation Server and Team System are now included with an MSDN subscription. Great information can be found from the MSDN blog site.

TFS 2010 server licensing: It's included in MSDN subscriptions

Cited from "Buck Hodges" blog on MSDN.

Thursday, May 6, 2010

Microsoft SQL Azure Quick Tips Link

This is a great link to a number of exercises you may need to do with SQL Azure.

http://blogs.msdn.com/sqlazure/archive/2010/05/06/10007449.aspx

The main one I was interested in was the one related to SSIS.

Exercise 3: Using SSIS for Data Import and Export

Windows Azure - Dr. Watson Diagnostic ID - Error and Potential Solution

I noticed that when using the Staging environment within Windows Azure, the GUID (unique ID) that is created for the deployment may in some cases become corrupt.

I found a workaround for this issue: stop the service, delete it, and then add the service back again. What I found is that in some cases the deployment ID or package can become corrupt, and even after you upgrade the package and config files the site is still inoperable.

I received many different errors during the process, such as Dr Watson, Role Not found, etc. Once I recreated the entire staging service everything was fine.

Just figured I would post this in case others notice a similar issue. I also noticed that the Azure service re-purposed a Staging URL (GUID) from another client, as I was able to see certain information that was not related to my site at all. Once the refresh was in effect the content was updated correctly, but while the update was happening I saw the other site's content.

Wednesday, May 5, 2010

Errors with Microsoft SQL Azure Data Sync Tools

SQL Azure Data Sync Microsoft Error Information

Once Microsoft provides me with a solution or I figure out how to resolve this issue, I will update the blog accordingly.

1. Detailed description of the issues that you are experiencing?

a. I am unable to use the Azure Data Sync Tool to synchronize data from my local database to SQL Azure. I receive several errors such as file not found and app error.

2. What operations were performed before seeing this issue?

a. After I select my tables and their order, I get to the point where the utility is about to synchronize the data, and it fails.

6. Please send us the screen shots of the errors which will help us to expedite investigation.

System.IO.FileNotFoundException was unhandled

Message=Could not load file or assembly 'Microsoft.Synchronization.Data, Version=3.0.0.0, Culture=neutral, PublicKeyToken=89845dcd8080cc91' or one of its dependencies. The system cannot find the file specified.

Source=PublishWizard

FileName=Microsoft.Synchronization.Data, Version=3.0.0.0, Culture=neutral, PublicKeyToken=89845dcd8080cc91

FusionLog=WRN: Assembly binding logging is turned OFF.

To enable assembly bind failure logging, set the registry value [HKLM\Software\Microsoft\Fusion!EnableLog] (DWORD) to 1.

Note: There is some performance penalty associated with assembly bind failure logging.

To turn this feature off, remove the registry value [HKLM\Software\Microsoft\Fusion!EnableLog].

StackTrace:

at DNBSoft.WPF.ProceedureDialog.ProceedureDialog.finishButton_Click(Object sender, RoutedEventArgs e)

at System.Windows.RoutedEventHandlerInfo.InvokeHandler(Object target, RoutedEventArgs routedEventArgs)

at System.Windows.EventRoute.InvokeHandlersImpl(Object source, RoutedEventArgs args, Boolean reRaised)

at System.Windows.UIElement.RaiseEventImpl(DependencyObject sender, RoutedEventArgs args)

at System.Windows.UIElement.RaiseEvent(RoutedEventArgs e)

at System.Windows.Controls.Primitives.ButtonBase.OnClick()

at System.Windows.Controls.Button.OnClick()

at System.Windows.Controls.Primitives.ButtonBase.OnMouseLeftButtonUp(MouseButtonEventArgs e)

at System.Windows.UIElement.OnMouseLeftButtonUpThunk(Object sender, MouseButtonEventArgs e)

at System.Windows.Input.MouseButtonEventArgs.InvokeEventHandler(Delegate genericHandler, Object genericTarget)

at System.Windows.RoutedEventArgs.InvokeHandler(Delegate handler, Object target)

at System.Windows.RoutedEventHandlerInfo.InvokeHandler(Object target, RoutedEventArgs routedEventArgs)

at System.Windows.EventRoute.InvokeHandlersImpl(Object source, RoutedEventArgs args, Boolean reRaised)

at System.Windows.UIElement.ReRaiseEventAs(DependencyObject sender, RoutedEventArgs args, RoutedEvent newEvent)

at System.Windows.UIElement.CrackMouseButtonEventAndReRaiseEvent(DependencyObject sender, MouseButtonEventArgs e)

at System.Windows.UIElement.OnMouseUpThunk(Object sender, MouseButtonEventArgs e)

at System.Windows.Input.MouseButtonEventArgs.InvokeEventHandler(Delegate genericHandler, Object genericTarget)

at System.Windows.RoutedEventArgs.InvokeHandler(Delegate handler, Object target)

at System.Windows.RoutedEventHandlerInfo.InvokeHandler(Object target, RoutedEventArgs routedEventArgs)

at System.Windows.EventRoute.InvokeHandlersImpl(Object source, RoutedEventArgs args, Boolean reRaised)

at System.Windows.UIElement.RaiseEventImpl(DependencyObject sender, RoutedEventArgs args)

at System.Windows.UIElement.RaiseEvent(RoutedEventArgs args, Boolean trusted)

at System.Windows.Input.InputManager.ProcessStagingArea()

at System.Windows.Input.InputManager.ProcessInput(InputEventArgs input)

at System.Windows.Input.InputProviderSite.ReportInput(InputReport inputReport)

at System.Windows.Interop.HwndMouseInputProvider.ReportInput(IntPtr hwnd, InputMode mode, Int32 timestamp, RawMouseActions actions, Int32 x, Int32 y, Int32 wheel)

at System.Windows.Interop.HwndMouseInputProvider.FilterMessage(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam, Boolean& handled)

at System.Windows.Interop.HwndSource.InputFilterMessage(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam, Boolean& handled)

at MS.Win32.HwndWrapper.WndProc(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam, Boolean& handled)

at MS.Win32.HwndSubclass.DispatcherCallbackOperation(Object o)

at System.Windows.Threading.ExceptionWrapper.InternalRealCall(Delegate callback, Object args, Boolean isSingleParameter)

at System.Windows.Threading.ExceptionWrapper.TryCatchWhen(Object source, Delegate callback, Object args, Boolean isSingleParameter, Delegate catchHandler)

at System.Windows.Threading.Dispatcher.WrappedInvoke(Delegate callback, Object args, Boolean isSingleParameter, Delegate catchHandler)

at System.Windows.Threading.Dispatcher.InvokeImpl(DispatcherPriority priority, TimeSpan timeout, Delegate method, Object args, Boolean isSingleParameter)

at System.Windows.Threading.Dispatcher.Invoke(DispatcherPriority priority, Delegate method, Object arg)

at MS.Win32.HwndSubclass.SubclassWndProc(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam)

at MS.Win32.UnsafeNativeMethods.DispatchMessage(MSG& msg)

at System.Windows.Threading.Dispatcher.PushFrameImpl(DispatcherFrame frame)

at System.Windows.Threading.Dispatcher.PushFrame(DispatcherFrame frame)

at System.Windows.Threading.Dispatcher.Run()

at System.Windows.Application.RunDispatcher(Object ignore)

at System.Windows.Application.RunInternal(Window window)

at System.Windows.Application.Run(Window window)

at SampleApplication.EntryPoint.Main(String[] args)

InnerException:

Again, I will update this post once I determine a solution or Microsoft provides one to me.

Tuesday, May 4, 2010

Accessing Data in SQL Azure

In a traditional on-premise application, the application code and database are located in the same physical data center. SQL Azure and the Windows Azure platform offer many alternatives to that architecture. The following diagram demonstrates two generalized alternatives available for how your application can access data with SQL Azure.

In Scenario A on the left, your application code remains on the premises of your corporate data center, but the database resides in SQL Azure. Your application code uses client libraries to access your database(s) in SQL Azure. For more information about the client libraries that are available, see Guidelines and Limitations (SQL Azure Database). Regardless of the client library chosen, data is transferred using tabular data stream (TDS) over a secure sockets layer (SSL).

In Scenario B on the right, your application code is hosted in the Windows Azure and your database resides in SQL Azure. Your application can use the same client libraries to access your database(s) in SQL Azure as are available in Scenario A. There are many different types of applications that you can host in the Windows Azure platform.

The Scenario B client premises may represent an end user's Web browser that is used to access your Web application. The Scenario B client premises may also be a desktop or Silverlight application that uses the benefits of the Entity Data Model and the WCF Data Services client to access your data that is hosted in SQL Azure.

For more information about the SQL Azure architecture, see SQL Azure Architecture.

Cited from Microsoft's Site: http://msdn.microsoft.com/en-us/library/ee336239.aspx

Friday, April 30, 2010

Migrating from ASP.Net MVC 1 to ASP.Net MVC 2

A pretty good write-up on some of the new features and techniques provided with MVC 2.

http://www.datasprings.com/Resources/ArticlesInformation/MigratingfromASPNetMVC1toASPNetMVC2.aspx

The top categories of MVC2:

- Areas

- Templated Helpers

- Asynchronous Controller

- RenderAction/Action

- Validation Options

Tuesday, April 27, 2010

Windows Azure Tools - Development Storage Service Error and Solution

If you are receiving this error:

indicating that the Windows Azure Tools - Development Storage Service failed to initialize, then please perform the following steps to resolve the error. Apparently, by default the service searches for SQLExpress.

Run Windows Azure SDK Command Prompt (as Administrator)

Enter DSInit /sqlinstance:[your sql instance name]

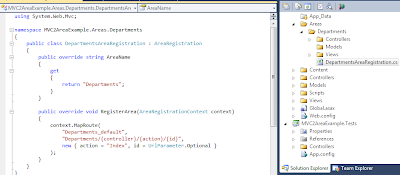

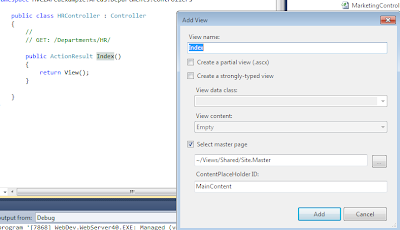

A Quick 8 Step Example on How to Add an Area using ASP.NET 4.0 MVC 2 and Visual Studio 2010

Step 1:

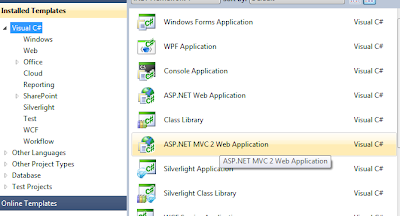

Open Visual Studio 2010 and Select “New Project”, ASP.NET MVC2 Web Application

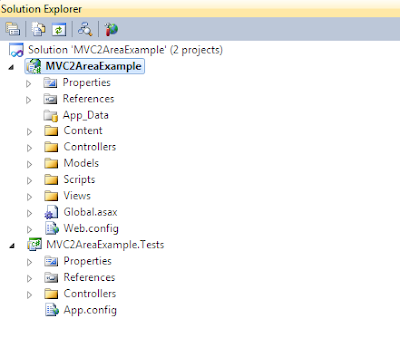

Once the project is created it will look similar to what is shown below.

Step 2:

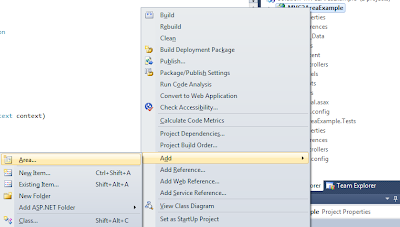

In the Solution Explorer Window Right Click on the Project File and Click “Add”, then click “Area”

Step 3:

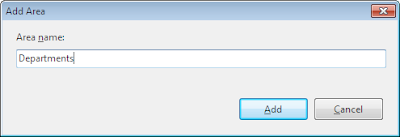

Enter the Area Name such as “Departments”

Note:

An example of an Area is shown below.

Code Snippet:

    public class DepartmentsAreaRegistration : AreaRegistration
    {
        public override string AreaName
        {
            get
            {
                return "Departments";
            }
        }

        public override void RegisterArea(AreaRegistrationContext context)
        {
            context.MapRoute(
                "Departments_default",
                "Departments/{controller}/{action}/{id}",
                new { action = "Index", id = UrlParameter.Optional }
            );
        }
    }

In addition to this code there is code in the “Global.asax” that is registering your areas as highlighted below.

    protected void Application_Start()
    {
        AreaRegistration.RegisterAllAreas();

        RegisterRoutes(RouteTable.Routes);
    }

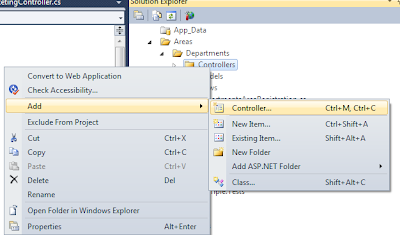

Step 4:

In the Solution Explorer Window Right Click on the Areas/[YourArea]/Controllers and Click “Add”, then click “Controller”. For this example the path would be “Areas/Departments/Controllers/”

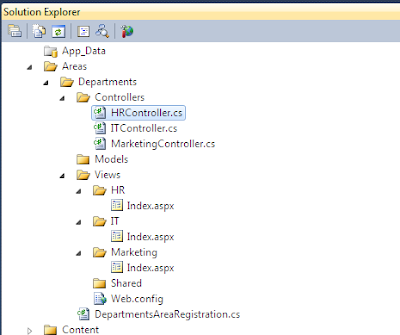

Step 5:

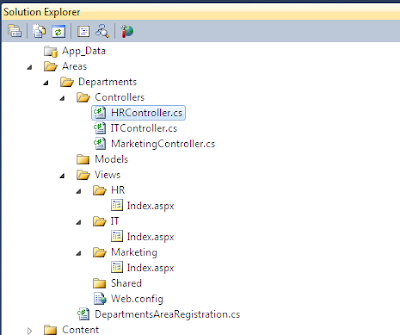

REPEAT STEP 4 FOR EACH CONTROLLER NEEDED. For this example, create three controllers: HR, IT, and Marketing.

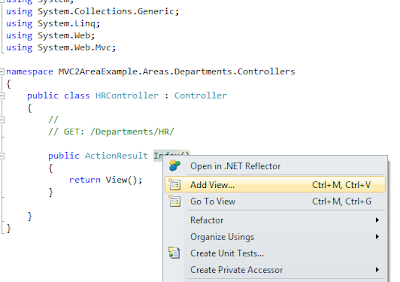

Step 6:

Next Add a View by right-clicking on the "Index" method in the appropriate controller and selecting “Add View”.

Step 7:

REPEAT STEP 6 FOR EACH VIEW NEEDED. For this example, create three views, one for each respective controller.

Note:

Once this is complete you will now see the appropriate structure located in the Views Folder in the Solution Explorer.

Step 8:

Run the project and navigate to the appropriate location:

http://localhost:[yourport]/Departments/HR

http://localhost:[yourport]/Departments/IT

http://localhost:[yourport]/Departments/Marketing
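If you are wondering why these URLs land on the Index action without naming it, the sketch below walks through how a pattern like "Departments/{controller}/{action}/{id}" and its defaults could resolve a path. This is a hand-rolled illustration only, not the ASP.NET MVC routing engine; the class `AreaRouteSketch` and its `Resolve` method are hypothetical, and the optional id is modeled here as an empty string default.

```csharp
using System;
using System.Collections.Generic;

public static class AreaRouteSketch
{
    // Illustration only: matches a URL path against a route pattern and fills in
    // defaults for trailing segments that the URL leaves out.
    public static Dictionary<string, string> Resolve(
        string pattern, string path, Dictionary<string, string> defaults)
    {
        string[] patternParts = pattern.Split('/');
        string[] pathParts = path.Trim('/').Split('/');
        if (pathParts.Length > patternParts.Length) return null;

        var values = new Dictionary<string, string>(defaults);
        for (int i = 0; i < patternParts.Length; i++)
        {
            string part = patternParts[i];
            bool isToken = part.StartsWith("{") && part.EndsWith("}");
            string name = isToken ? part.Substring(1, part.Length - 2) : null;

            if (i < pathParts.Length)
            {
                if (isToken)
                    values[name] = pathParts[i];           // bind {controller}, {action}, ...
                else if (!string.Equals(part, pathParts[i], StringComparison.OrdinalIgnoreCase))
                    return null;                           // literal segment does not match
            }
            else if (!isToken || !values.ContainsKey(name))
            {
                return null;                               // missing segment with no default
            }
        }
        return values;
    }
}
```

For example, resolving "Departments/HR" with the defaults action = "Index" and id = "" yields controller = "HR" and action = "Index", which is why the Index view of the HR controller is shown.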

Hopefully this guide was useful as a quick 8 Step dive into the New "Areas" introduced with Microsoft Visual Studio 2010, ASP.NET and MVC 2.

Monday, April 26, 2010

Windows Azure Platform Benefits for MSDN Subscribers

Included is a link to Microsoft's site for this information.

Windows Azure Platform Benefits for MSDN Subscribers

(from Microsoft's Site - All content is theirs.)

Tuesday, April 20, 2010

Visual Studio 2010 - Publish Details and Output for detailed troubleshooting

Have you ever had Visual Studio tell you your web application publish failed, but never gives you a reason why? You’re not alone. I’ve been putting off looking into this issue on a project and just found a way to get my answer right away.

Visual Studio 2010 Publish Fails

Here’s what you should do inside of Visual Studio to find out why your publish failed.

- Click the Tools menu > then Options to bring up the Options dialog.

- Expand ‘Projects and Solutions’ on the left and click General

- On General, click ‘Show Output window when build starts’

- Now click on ‘Build and Run’ in the left tree

- Next select a value from the ‘MSBuild project build output verbosity’ drop down menu

- OK your way back to the main Visual Studio window

- The next time you Build or Publish your project, you should see the Output panel pop up. If your publish is still failing, the answer will live inside the Output panel

Windows7, Vault, and SQL Server 2008 Issues - Resolution

If you are getting the following error, then try these steps to correct the issue.

regsvr32 actxprxy.dll

If that does not work try:

for %1 in (%windir%\system32\*.dll) do regsvr32 /s %1

for %1 in (%windir%\system32\*.ocx) do regsvr32 /s %1

If that still does not work try this as a final step.

http://support.sourcegear.com/viewtopic.php?f=5&t=12680&p=52790&hilit=management+studio#p52790

x86: regsvr32 "C:\Program Files\Internet Explorer\ieproxy.dll"

x64: regsvr32 "C:\Program Files (x86)\Internet Explorer\ieproxy.dll"

Interestingly enough I had to perform all of these steps to correct the issue.

Sunday, April 18, 2010

Software Architecture Assessment Outline

The primary goal of this software architecture assessment outline is to help identify and recommend how to increase the software quality while lessening the overall maintenance needs from both software and hardware perspectives. To perform this assessment several major categories will be reviewed in combination with a weighted measurement factor for each category and rolled up into one final summary.

General Software Architecture Assessment Categories

Included in this outline are further definitions of each of the software assessment categories evaluated. Within each section is a detailed explanation of the category relevant to this document, a design/architectural principles section, measurement factors, and a score summary.

For this outline only a high-level software architecture assessment is being applied. However, the measurement factors will most likely be evaluated as a part of a more detailed architectural analysis. For the purpose of this assessment, the measurement factors were considered, but not effectively evaluated in all code modules.
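As a rough sketch of how the per-category scores could be rolled up into one final summary, the example below computes a weighted average. The outline does not prescribe a formula, so the weighted-average approach, the 0 to 5 score scale, and the names `CategoryScore` and `AssessmentSummary` are all assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;

public class CategoryScore
{
    public readonly string Name;
    public readonly double Weight;  // relative importance (assumed: any positive number)
    public readonly double Score;   // assessed score (assumed scale: 0 to 5)

    public CategoryScore(string name, double weight, double score)
    {
        Name = name;
        Weight = weight;
        Score = score;
    }
}

public static class AssessmentSummary
{
    // Rolls the category scores up into a single weighted average.
    public static double WeightedAverage(IEnumerable<CategoryScore> categories)
    {
        double totalWeight = 0;
        double weightedSum = 0;
        foreach (CategoryScore c in categories)
        {
            totalWeight += c.Weight;
            weightedSum += c.Weight * c.Score;
        }
        if (totalWeight <= 0)
            throw new ArgumentException("At least one category must have a positive weight.");
        return weightedSum / totalWeight;
    }
}
```

With Performance (weight 3, score 4), Security (weight 2, score 2), and Testability (weight 1, score 5), the summary works out to (12 + 4 + 5) / 6 = 3.5.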

Performance

The performance of an application is generally categorized as how well the application responds to simultaneous events. The performance can also be viewed as how well the application responds after a certain interval of time.

Design/Architectural Principles Evaluated

• Connection Pooling

• Load Balancing

• Distributed Processing

• Caching

• Object Instantiation

• Transaction concurrency

• Process Isolation

• Replication of Data

Measurement Factors

• Transactions per unit time

• Amount of time it takes to complete a transaction
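Both measurement factors can be captured with a simple timing harness. The sketch below is one possible approach, assuming the "transaction" can be wrapped in a delegate and run repeatedly on a single thread; `PerformanceProbe` is a hypothetical name, and a real assessment would more likely rely on a load testing tool.

```csharp
using System;
using System.Diagnostics;

public static class PerformanceProbe
{
    // Runs the transaction repeatedly and reports throughput and average duration.
    public static void Measure(Action transaction, int iterations,
                               out double perSecond, out double averageMs)
    {
        if (iterations <= 0) throw new ArgumentOutOfRangeException("iterations");

        Stopwatch watch = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
        {
            transaction();
        }
        watch.Stop();

        // Guard against a zero elapsed time on very fast runs.
        double seconds = Math.Max(watch.Elapsed.TotalSeconds, 1e-9);
        perSecond = iterations / seconds;                          // transactions per unit time
        averageMs = watch.Elapsed.TotalMilliseconds / iterations;  // time per transaction
    }
}
```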

Reliability

Application reliability represents how well the system continues to operate over time in the context of application and system errors in situations of unexpected or incorrect usage. The reliability of the system can be viewed as how well overall the system performs based on a predictable set of factors.

Design/Architectural Principles Evaluated

• Using preventive measures

o Recycling of server processes in IIS 6, ASP.NET/COM+ 1.5

o Containment - COM+ server process isolation

o Database transaction logs (rollback)

Measurement Factors

• Mean time to failure

Availability

Availability refers to the ability of the user community to access the system, whether to submit new work, update or alter existing work, or collect the results of previous work. If a user cannot access the system, it is said to be unavailable. Generally, the term downtime is used to refer to periods when a system is unavailable.

Design/Architectural Principles Evaluated

• Fail-over

• Transaction Manager

• Stateless Design

Measurement Factors

• Length of time between failures

• How quickly the system is able to resume operation in the event of failure.
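The two measurement factors above are commonly combined into a single availability figure using the standard ratio of mean time between failures (MTBF) to MTBF plus mean time to recover (MTTR). The outline itself does not give a formula, so treat this as a sketch; `AvailabilityMath` is a hypothetical helper name.

```csharp
using System;

public static class AvailabilityMath
{
    // Availability = MTBF / (MTBF + MTTR), with both values in the same time unit.
    public static double Availability(double meanTimeBetweenFailures, double meanTimeToRecover)
    {
        if (meanTimeBetweenFailures <= 0)
            throw new ArgumentOutOfRangeException("meanTimeBetweenFailures");
        if (meanTimeToRecover < 0)
            throw new ArgumentOutOfRangeException("meanTimeToRecover");

        return meanTimeBetweenFailures / (meanTimeBetweenFailures + meanTimeToRecover);
    }
}
```

For instance, a system that runs 999 hours between failures and takes 1 hour to resume operation is available 999 / 1000 of the time, or 99.9%.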

Security

Application security encompasses measures taken to prevent exceptions in the security policy of an application or the underlying system (vulnerabilities) through flaws in the design, development, or deployment of the application.

Applications only control the use of resources granted to them, and not which resources are granted to them. They, in turn, determine the use of these resources by users of the application through application security.

Design/Architectural Principles Evaluated

• Authorization

• Authentication

• Auditing

• Integrity

• Confidentiality

• Denial-of-service

• Data Isolation

Measurement Factors

• N/A

Portability

Portability is one of the key concepts of high-level programming. Portability is the ability of a software codebase to reuse existing code instead of creating new code when moving software from one environment to another. The prerequisite for portability is a generalized abstraction between the application logic and system interfaces. When one is targeting several platforms with the same application, portability is the key issue for development cost reduction.

Design/Architectural Principles Evaluated

• Virtual machines

• Functionality

Measurement Factors

• Number of change requests

Change Management

The change management process in systems engineering is the process of requesting, determining the attainability of, planning, implementing, and evaluating changes to a system. Change management has two main goals: supporting the processing of changes and enabling traceability of changes, both of which should be possible through proper execution of the process.

Design/Architectural Principles Evaluated

• Client-Server

• Independence of interface from implementation

• Strategy Separation

• Encoding function into data meta-data and language interpreters

• Runtime Discovery

Measurement Factors

• Using specific changes as benchmarks and recording how expensive those changes are to implement

Extensibility

In software engineering, extensibility (sometimes confused with forward compatibility) is a system design principle where the implementation takes into consideration future growth. It is a systemic measure of the ability to extend a system and the level of effort required to implement the extension. Extensions can be through the addition of new functionality or through modification of existing functionality. The central theme is to provide for change while minimizing impact to existing system functions.

In systems architecture, extensibility means the system is designed to include hooks and mechanisms for expanding/enhancing the system with new capabilities without having to make major changes to the system infrastructure. A good architecture provides the design principles to ensure this—a roadmap for that portion of the road yet to be built. Note that this usually means that capabilities and mechanisms must be built into the final delivery, which will not be used in that delivery and, indeed, may never be used. These excess capabilities are not frills, but are necessary for maintainability and for avoiding early obsolescence.

Design/Architectural Principles Evaluated

• Easy incremental additions of functionality

• Coupling/cohesion

• Conceptual Integrity

Measurement Factors

• N/A

Interoperability

Interoperability is a property referring to the ability of diverse systems and organizations to work together (inter-operate). The term is often used in a technical systems engineering sense, or alternatively in a broad sense, taking into account social, political, and organizational factors that impact system-to-system performance.

Design/Architectural Principles Evaluated

• Simple data-types

• XML

• RSS

• Web Services

• Windows Communication Foundation

• .Net Remoting

Measurement Factors

• General overview of service oriented architecture

Usability and Standards

Usability and software standards enable software to interoperate seamlessly and cohesively. Many things are (somewhat) arbitrary, so the important thing is that everyone agrees on what they are and represent within an organization. Usability and software standards are one of the unsolved problems in software engineering.

The key factor evaluated is the incorrect implementation of standards or specifications. In many organizations, incorrect implementations result in a requirement for implementation-specific code and special-case exceptions as a necessity for cross-platform interoperability. Notable modern examples include web browser compatibility and web-services interoperability. The arbitrariness of most software concepts is related to historical hardware and software implementation, a lack of common standards worldwide, and economic pressures.

Design/Architectural Principles Evaluated

• User Interface Standards

• Coding Standards

• Deployment Standards

• Security Standards

• Database Standards

• Service Oriented Architecture Standards

Measurement Factors

• Number of errors made by a user familiar with prior releases or other members of the product line

Maintainability

Based on the definition given in ISO 9126, maintainability is the ease with which a software product can be modified in order to correct defects, meet new requirements, make future maintenance easier, or cope with a changed environment.

Design/Architectural Principles Evaluated

• Localization

• Globalization

• Effects of change

Measurement Factors

• N/A

Efficiency

In software engineering, the efficiency of an application is defined as how well the application has been coded in order to be efficient. The efficiency is often handled by acquiring resources during the initial load of an application such as a splash screen and then releasing resources throughout the application.

Design/Architectural Principles Evaluated

• Acquire late, release early

• Reducing round-trips

• Lowering traffic throughput
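The "acquire late, release early" principle maps naturally onto the C# `using` statement. The sketch below uses a made-up `TrackedResource` class as a stand-in for something expensive such as a database connection; the class and its open counter are assumptions purely for illustration.

```csharp
using System;

// Stand-in for an expensive resource such as a database connection (hypothetical).
public sealed class TrackedResource : IDisposable
{
    public static int OpenCount;
    private bool _disposed;

    public TrackedResource() { OpenCount++; }

    public string Read() { return "data"; }

    public void Dispose()
    {
        if (_disposed) return;   // safe to call more than once
        _disposed = true;
        OpenCount--;
    }
}

public static class AcquireLateReleaseEarly
{
    public static int Process()
    {
        string data;
        using (TrackedResource resource = new TrackedResource())  // acquire as late as possible
        {
            data = resource.Read();
        }                                                          // released here, before further work

        return Transform(data);  // the remaining work no longer holds the resource
    }

    private static int Transform(string data) { return data.Length; }
}
```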

Measurement Factors

• N/A

Testability

The testability of an application refers to how easy it is to test and validate the code as a unit, sub-system, or application. The use of key tools, such as automated unit testing tools, along with white-box and black-box testing, is also considered when evaluating the overall testability of a particular system.

Tests are applied at several steps in the hardware manufacturing flow and, for certain products, may be used for hardware maintenance in the customer’s environment. The tests generally are driven by test programs that execute in Automatic Test Equipment (ATE) or, in the case of system maintenance, inside the assembled system itself. In addition to finding and indicating the presence of defects (i.e., the test fails), tests may be able to log diagnostic information about the nature of the encountered test fails. The diagnostic information can be used to locate the source of the failure.

Testability plays an important role in the development of test programs and as an interface for test application and diagnostics. Automatic test pattern generation, or ATPG, is much easier if appropriate testability rules and suggestions have been implemented.

Design/Architectural Principles Evaluated

• Test plans

• Code implemented unit test scripts

• Build server automation

• Interface-based programming

• Inversion of control/Dependency injection

• Classes with well defined responsibilities
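To illustrate why interface-based programming and dependency injection help testability, here is a small sketch. The `IClock`, `FixedClock`, and `GreetingService` names are hypothetical; the point is only that a class receiving its dependency through the constructor can be tested with a predictable substitute.

```csharp
using System;

public interface IClock
{
    DateTime Now { get; }
}

// Production implementation backed by the system clock.
public sealed class SystemClock : IClock
{
    public DateTime Now { get { return DateTime.Now; } }
}

// Test implementation that always returns the same moment.
public sealed class FixedClock : IClock
{
    private readonly DateTime _now;
    public FixedClock(DateTime now) { _now = now; }
    public DateTime Now { get { return _now; } }
}

public sealed class GreetingService
{
    private readonly IClock _clock;

    // The dependency is injected, so tests can control the time of day.
    public GreetingService(IClock clock)
    {
        if (clock == null) throw new ArgumentNullException("clock");
        _clock = clock;
    }

    public string Greet()
    {
        return _clock.Now.Hour < 12 ? "Good morning" : "Good afternoon";
    }
}
```

In production the service would be constructed with `SystemClock`; a unit test passes a `FixedClock` so the result does not depend on when the test runs.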

Measurement Factors

• N/A

Reusability

In computer science and software engineering, reusability is the likelihood a segment of source code can be used again to add new functionalities with slight or no modification. Reusable modules and classes reduce implementation time, increase the likelihood that prior testing and use have eliminated bugs, and localize code modifications when a change in implementation is required.

Subroutines or functions are the simplest form of reuse. A chunk of code is regularly organized using modules or namespaces into layers. Proponents claim that objects and software components offer a more advanced form of reusability, although it has been tough to objectively measure and define levels or scores of reusability.

The ability to reuse relies in an essential way on the ability to build larger things from smaller parts, and being able to identify commonalities among those parts. Reusability is often a required characteristic of platform software.

Reusability implies some explicit management of build, packaging, distribution, installation, configuration, deployment, and maintenance and upgrade issues. Software reusability more specifically refers to design features of a software element (or collection of software elements) that enhance its suitability for reuse.

Design/Architectural Principles Evaluated

• Code components are reusable

• Use Enterprise Libraries

• Use stored procedures

• Reuse of User Controls

• Reuse of Web User Controls

• Use of common services

• Use of business objects

Measurement Factors

• N/A

Ease of deployment

Software deployment is all of the activities that make a software system available for use. The general deployment process consists of several interrelated activities with possible transitions between them. These activities can occur at the producer site or at the consumer site or both. Because every software system is unique, the precise processes or procedures within each activity can hardly be defined. Therefore, "ease of deployment" can be interpreted as a general process that has been customized according to specific requirements or characteristics to aid and assist in the overall ease of deploying the specific software.

Design/Architectural Principles Evaluated

• Deployment mechanism

• Installation programs

• Automated updates

• Hot-fix deployment

Measurement Factors

• Can be measured by the time and resources required to install the product and/or distribute a new unit of functionality

Ease of administration

The ease of administration refers to the infrastructure, tools, and staff of administrators and technicians needed to maintain the health of the application. The ease of administration would include items such as being able to change the physical locations of services while having a minimal impact on the rest of the system.

Design/Architectural Principles Evaluated

• N/A

Measurement Factors

• Decreased Support Cost: can be measured by comparing number of help desk calls for a standard period of time

Scalability

In software engineering scalability is a desirable property of a system, a network, or a process, which indicates its ability to handle growing amounts of work in a graceful manner, or to be readily enlarged. For example, it can refer to the capability of a system to increase total throughput under an increased load when resources (typically hardware) are added.

Scalability, as a property of systems, is generally difficult to define and in any particular case, it is necessary to define the specific requirements for scalability on those dimensions, which are deemed important. An algorithm, design, networking protocol, program, or other system is said to scale if it is suitably efficient and practical when applied to large situations (e.g. a large input data set or large number of participating nodes in the case of a distributed system). If the design fails when the quantity increases then it does not scale.

The ability to support more users while maintaining the same level of performance, user demand, and business complexity would be considered scalable. The system must be able to extend the minimum hardware configuration needed for the application with additional hardware to support increased workloads.

Design/Architectural Principles Evaluated

• Stateless design

• Load-balancing

• Concurrency (optimistic)

• Serialization
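Optimistic concurrency from the list above can be sketched with a version number per row: a writer only succeeds if the version it read is still current. The in-memory `VersionedStore` below is a hypothetical stand-in for a database table with a row-version column, not a recommendation for a specific product feature.

```csharp
using System;
using System.Collections.Generic;

public sealed class VersionedStore
{
    // Maps key -> (version, value).
    private readonly Dictionary<string, KeyValuePair<int, string>> _rows =
        new Dictionary<string, KeyValuePair<int, string>>();
    private readonly object _gate = new object();

    // Returns the current version (0 if the key has never been written).
    public int Read(string key, out string value)
    {
        lock (_gate)
        {
            KeyValuePair<int, string> row;
            if (_rows.TryGetValue(key, out row))
            {
                value = row.Value;
                return row.Key;
            }
            value = null;
            return 0;
        }
    }

    // Writes only if nobody else has changed the row since it was read.
    public bool TryUpdate(string key, int expectedVersion, string newValue)
    {
        lock (_gate)
        {
            KeyValuePair<int, string> row;
            int currentVersion = _rows.TryGetValue(key, out row) ? row.Key : 0;
            if (currentVersion != expectedVersion) return false;  // conflict: caller must re-read

            _rows[key] = new KeyValuePair<int, string>(currentVersion + 1, newValue);
            return true;
        }
    }
}
```

No lock is held between the read and the write; the brief lock inside each method only protects the dictionary itself. A caller whose update is rejected re-reads the row and retries, which tends to scale better than holding locks when conflicts are rare.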

Measurement Factors

• N/A

Debug-ability / Monitoring

Debugging and monitoring is a name for design techniques that add certain testability features to a microelectronic hardware product design. The premise of the added features is that they make it easier to develop and apply manufacturing tests for the designed hardware. The purpose of manufacturing tests is to validate that the product hardware contains no defects that could otherwise adversely affect the product's correct functioning.

Design/Architectural Principles Evaluated

• Tracing support

• Logging in exception handling mechanism

• Alerting/notification mechanism
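For the tracing and exception logging principles above, a minimal sketch using the built-in `System.Diagnostics.Trace` class might look like the following. The `GuardedRunner` wrapper is a hypothetical name, and returning false instead of rethrowing is a simplification for the example; real code would usually rethrow or raise an alert.

```csharp
using System;
using System.Diagnostics;

public static class GuardedRunner
{
    // Runs an operation with trace output around it and logs any exception.
    public static bool TryRun(string operationName, Action operation)
    {
        Trace.TraceInformation("Starting {0}", operationName);
        try
        {
            operation();
            Trace.TraceInformation("Finished {0}", operationName);
            return true;
        }
        catch (Exception ex)
        {
            // The full exception, including the stack trace, goes to the trace listeners.
            Trace.TraceError("{0} failed: {1}", operationName, ex);
            return false;
        }
    }
}
```

Where the output ends up (a file, the event log, a debugger) depends on which trace listeners are configured for the application.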

General Software Architecture Assessment Categories

Included in this outline are further definitions of each of the software assessment categories evaluated. Within each section is a detailed explanation of the category relevant to this document, a design /architectural principles section, measurement factors, and a score summary.

For this outline only a high-level software architecture assessment is being applied. However, the measurement factors will most likely be evaluated as a part of a more detailed architectural analysis. For the purpose of this assessment, the measurement factors were considered, but not effectively evaluated in all code modules.

Performance

The performance of an application is generally categorized as how well the application responds to simultaneous events. The performance can also be viewed as how well the application responds after a certain interval of time.

Design/Architectural Principles Evaluated

• Connection Pooling

• Load Balancing

• Distributed Processing

• Caching

• Object Instantiation

• Transaction concurrency

• Process Isolation

• Replication of Data

Measurement Factors

• Transactions per unit time

• Amount of time it takes to complete a transaction

Reliability

Application reliability represents how well the system continues to operate over time in the context of application and system errors in situations of unexpected or incorrect usage. The reliability of the system can be viewed as how well overall the system performs based on a predictable set of factors.

Design/Architectural Principles Evaluated

• Using preventive measures

o Recycling of server processes in IIS.6 ASP.NET /COM+ 1.5

o Containment - COM+ server process isolation

o Database transaction logs (rollback)

Measurement Factors

• Mean-time to failure

Availability

Availability refers to the ability of the user community to access the system, whether to submit new work, update or alter existing work, or collect the results of previous work. If a user cannot access the system, it is said to be unavailable. Generally, the term downtime is used to refer to periods when a system is unavailable.

Design/Architectural Principles Evaluated

• Fail-over

• Transaction Manager

• Stateless Design

Measurement Factors

• Length of time between failures

• How quickly the system is able to resume operation in the event of failure.

Security

Application security encompasses measures taken to prevent exceptions in the security policy of an application or the underlying system (vulnerabilities) through flaws in the design, development, or deployment of the application.

Applications only control the use of resources granted to them, and not which resources are granted to them. They, in turn, determine the use of these resources by users of the application through application security.

Design/Architectural Principles Evaluated

• Authorization

• Authentication

• Auditing

• Integrity

• Confidentiality

• Denial-of-service

• Data Isolation

Measurement Factors

• N/A

Portability

Portability is one of the key concepts of high-level programming. Portability is the software codebase feature to be able to reuse the existing code instead of creating new code when moving software from an environment to another. The pre-requirement for portability is the generalized abstraction between the application logic and system interfaces. When one is targeting several platforms with the same application, portability is the key issue for development cost reduction

Design/Architectural Principles Evaluated

• Virtual machines

• Functionality

Measurement Factors

• Number change request

Change Management

The change management process in systems engineering is the process of requesting, determining attainability, planning, implementing and evaluation of changes to a system. There are two main goals concerning change management. The main goals include, supporting the processing of changes, and enabling traceability of changes, which should be possible through proper execution of the process of the system or application.

Design/Architectural Principles Evaluated

• Client-Server

• Independence of interface from implementation

• Strategy Separation

• Encoding function into data meta-data and language interpreters

• Runtime Discovery

Measurement Factors

• Using specific changes as benchmarks and recording how expensive those changes are to implement

Extensibility

In software engineering, extensibility (sometimes confused with forward compatibility) is a system design principle where the implementation takes into consideration future growth. It is a systemic measure of the ability to extend a system and the level of effort required to implement the extension. Extensions can be through the addition of new functionality or through modification of existing functionality. The central theme is to provide for change while minimizing impact to existing system functions.

In systems architecture, extensibility means the system is designed to include hooks and mechanisms for expanding/enhancing the system with new capabilities without having to make major changes to the system infrastructure. A good architecture provides the design principles to ensure this—a roadmap for that portion of the road yet to be built. Note that this usually means that capabilities and mechanisms must be built into the final delivery, which will not be used in that delivery and, indeed, may never be used. These excess capabilities are not frills, but are necessary for maintainability and for avoiding early obsolescence.

Design/Architectural Principles Evaluated

• Easy incremental additions of functionality

• Coupling/cohesion

• Conceptual Integrity

Measurement Factors

• N/A

Interoperability

Interoperability is a property referring to the ability of diverse systems and organizations to work together (inter-operate). The term is often used in a technical systems engineering sense, or alternatively in a broad sense, taking into account social, political, and organizational factors that impact system-to-system performance.

Design/Architectural Principles Evaluated

• Simple data-types

• XML

• RSS

• Web Services

• Windows Communication Foundation

• .Net Remoting

Measurement Factors

• General overview of service oriented architecture

Usability and Standards

Usability and software standards enable software to interoperate seamlessly and cohesively. Many things are (somewhat) arbitrary, so the important thing is that everyone agrees on what they are and represent within an organization. Usability and software standards are one of the unsolved problems in software engineering.

The key factor evaluated is the incorrect implementation of standards or specifications. Many organizations result in a requirement for implementation specific code and special case exceptions as a necessity for cross-platform interoperability. Notable modern examples include web browser compatibility and web-services interoperability. The arbitrariness of most software concepts, which is related to historical hardware and software implementation, lack of common standards worldwide, and economic pressures.

Design/Architectural Principles Evaluated

• User Interface Standards

• Coding Standards

• Deployment Standards

• Security Standards

• Database Standards

• Service Oriented Architecture Standards

Measurement Factors

• Number of errors made by a user familiar with prior releases or other members of the product line

Maintainability

In software testing, based on the definition given in ISO 9126, the ease with which a software product can be modified in order to, correct defects, meet new requirements, make future maintenance easier, or cope with a changed environment.

Design/Architectural Principles Evaluated

• Localization

• Globalization

• Effects of change

Measurement Factors

• N/A

Efficiency

In software engineering, the efficiency of an application is defined as how well the application has been coded in order to be efficient. The efficiency is often handled by acquiring resources during the initial load of an application such as a splash screen and then releasing resources throughout the application.

Design/Architectural Principles Evaluated

• Acquire late, release early

• Reducing round-trips

• Lowering traffic throughput

Measurement Factors

• N/A

Testability

The testability of an application refers to how easy it is to test and validate the code as a unit, sub-system or application. Using key tools such as automated testing tools for unit testing, white box and black box testing are also considered when evaluating the overall testability of a particular system.

Tests are applied at several steps in the hardware manufacturing flow and, for certain products, may be used for hardware maintenance in the customer’s environment. The tests generally are driven by test programs that execute in Automatic Test Equipment (ATE) or, in the case of system maintenance, inside the assembled system itself. In addition to finding and indicating the presence of defects (i.e., the test fails), tests may be able to log diagnostic information about the nature of the encountered test fails. The diagnostic information can be used to locate the source of the failure.

Testability plays an important role in the development of test programs and as an interface for test application and diagnostics. Automatic test pattern generation, or ATPG, is much easier if appropriate testability rules and suggestions have been implemented.

Design/Architectural Principles Evaluated

• Test plans

• Code implemented unit test scripts

• Build server automation

• Interface-based programming

• Inversion of control/Dependency injection

• Classes with well defined responsibilities

Measurement Factors

• N/A

Reusability

In computer science and software engineering, reusability is the likelihood a segment of source code can be used again to add new functionalities with slight or no modification. Reusable modules and classes reduce implementation time, increase the likelihood that prior testing and use has eliminated bugs and localizes code modifications when a change in implementation is required.

Subroutines or functions are the simplest form of reuse. A chunk of code is regularly organized using modules or namespaces into layers. Proponents claim that objects and software components offer a more advanced form of reusability, although it has been tough to objectively measure and define levels or scores of reusability.

The ability to reuse relies in an essential way on the ability to build larger things from smaller parts, and being able to identify commonalities among those parts. Reusability is often a required characteristic of platform software.

Reusability implies some explicit management of build, packaging, distribution, installation, configuration, deployment, and maintenance and upgrade issues. Software reusability more specifically refers to design features of a software element (or collection of software elements) that enhance its suitability for reuse.

Design/Architectural Principles Evaluated

• Code components are reusable

• Use Enterprise Libraries

• Use stored procedures

• Reuse of User Controls

• Reuse of Web User Controls

• Use of common services

• Use of business objects

Measurement Factors

• N/A

Ease of deployment

Software deployment is all of the activities that make a software system available for use. The general deployment process consists of several interrelated activities with possible transitions between them. These activities can occur at the producer site or at the consumer site or both. Because every software system is unique, the precise processes or procedures within each activity can hardly be defined. Therefore, "ease of deployment" can be interpreted as a general process that has been customized according to specific requirements or characteristics to aid and assist in the overall ease of deploying the specific software.

Design/Architectural Principles Evaluated

• Deployment mechanism

• Installation programs

• Automated updates

• Hot-fix deployment

Measurement Factors

• Can be measured by the time and resources required to install the product and/or distribute a new unit of functionality

Ease of administration

The ease of administration refers to the infrastructure, tools, and staff of administrators and technicians needed to maintain the health of the application. The ease of administration would include items such as being able to change the physical locations of services while having a minimal impact on the rest of the system.

Design/Architectural Principles Evaluated

• N/A

Measurement Factors

• Decreased Support Cost: can be measured by comparing number of help desk calls for a standard period of time

Scalability

In software engineering, scalability is a desirable property of a system, a network, or a process that indicates its ability to handle growing amounts of work in a graceful manner, or to be readily enlarged. For example, it can refer to the capability of a system to increase total throughput under an increased load when resources (typically hardware) are added.

Scalability, as a property of systems, is generally difficult to define, and in any particular case it is necessary to define the specific scalability requirements on those dimensions that are deemed important. An algorithm, design, networking protocol, program, or other system is said to scale if it is suitably efficient and practical when applied to large situations (e.g. a large input data set or a large number of participating nodes in the case of a distributed system). If the design fails when the quantity increases, then it does not scale.

A system that can support more users while maintaining the same level of performance, user demand, and business complexity would be considered scalable. The system must be able to extend the minimum hardware configuration needed for the application with additional hardware to support increased workloads.

Design/Architectural Principles Evaluated

• Stateless design

• Load-balancing

• Concurrency (optimistic)

• Serialization
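Optimistic concurrency, one of the principles listed above, can be sketched as a version check at write time instead of a lock held while the user works. The following Python example is only an illustration under that assumption: `InMemoryStore`, `Record`, and `StaleWriteError` are hypothetical names, and a real system would normally perform the version comparison in the database.

```python
from dataclasses import dataclass


class StaleWriteError(Exception):
    """Raised when a record changed after the caller read it."""


@dataclass
class Record:
    value: str
    version: int = 0


class InMemoryStore:
    """Hypothetical store standing in for a database table."""

    def __init__(self) -> None:
        self._rows = {}  # maps key -> Record

    def insert(self, key: str, value: str) -> None:
        self._rows[key] = Record(value)

    def read(self, key: str) -> Record:
        row = self._rows[key]
        return Record(row.value, row.version)  # hand back a copy

    def update(self, key: str, new_value: str, expected_version: int) -> None:
        row = self._rows[key]
        # No lock is held between read and update; the write is rejected
        # only if someone else changed the row in the meantime.
        if row.version != expected_version:
            raise StaleWriteError(key)
        self._rows[key] = Record(new_value, row.version + 1)
```

If two callers read the same record and both try to update it, the first update succeeds and the second raises `StaleWriteError`, so the second caller can re-read and retry. Since no locks are held between requests, this approach tends to fit well with a stateless, load-balanced design.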

Measurement Factors

• N/A

Debug-ability / Monitoring

Debugging and monitoring is a name for design techniques that add certain testability features to a microelectronic hardware product design. The premise of the added features is that they make it easier to develop and apply manufacturing tests for the designed hardware. The purpose of manufacturing tests is to validate that the product hardware contains no defects that could otherwise adversely affect the product's correct functioning.

Design/Architectural Principles Evaluated

• Tracing support

• Logging in exception handling mechanism

• Alerting/notification mechanism
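In a software context, the three principles above might look something like the following Python sketch, which uses only the standard `logging` module. The function names (`process_order`, `notify_on_call`) and the logger name `orders` are hypothetical, and the alert hook is a placeholder for whatever notification mechanism a real system would use.

```python
import logging

logger = logging.getLogger("orders")


def notify_on_call(message: str) -> None:
    """Hypothetical alert hook; a real one might send e-mail or page someone."""
    logger.critical("ALERT: %s", message)


def process_order(order_id: int, quantity: int) -> bool:
    # Tracing support: record entry into the operation and its inputs.
    logger.debug("processing order %s, quantity=%s", order_id, quantity)
    try:
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        return True
    except ValueError:
        # Logging in the exception handler: logger.exception records the
        # message together with the full stack trace.
        logger.exception("order %s failed", order_id)
        # Alerting/notification: escalate the failure to a person.
        notify_on_call(f"order {order_id} failed")
        return False
```

Calling `process_order(2, 0)` returns `False` after logging the error and raising the alert, while a valid call such as `process_order(1, 2)` returns `True` and emits only the debug-level trace.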
